An artificial intelligence system used to grade standardized tests in Massachusetts incorrectly scored approximately 1,400 student essays, a technical glitch that affected nearly 200 school districts across the state. The error, which in some cases assigned scores of zero to well-written work, was discovered by a teacher and has since prompted a full review and rescoring of the affected exams.

The Massachusetts Department of Elementary and Secondary Education (DESE) has notified 192 school districts that students' Massachusetts Comprehensive Assessment System (MCAS) scores were impacted. Following a review by the state's testing contractor, all affected essays have been rescored by human graders, resulting in higher scores for every student involved.

Key Takeaways

- A technical glitch with an AI grading tool incorrectly scored about 1,400 MCAS student essays.

- The error affected 192 school districts across Massachusetts.

- The issue was first identified by a teacher who noticed a student's high-quality essay received a score of zero.

- All impacted essays have been manually rescored, and every corrected score was higher than the original AI-generated one.

- The incident has raised concerns among parents and educators about transparency, bias, and the appropriateness of using AI for subjective assessments.

Discovery of a Widespread Error

The problem first came to light when a teacher in Lowell was reviewing her students' essays over the summer. She noticed a significant discrepancy: an essay she believed deserved a score of six out of seven points had been given a zero by the automated system. This observation was the catalyst for a broader investigation.

After the teacher alerted her district leaders, the district contacted DESE, which opened a review with its testing vendor. The investigation confirmed a systemic technical issue and identified about 1,400 similarly misscored essays across the state. Officials have confirmed that no student's score was lowered in the correction process; every rescore was an improvement.

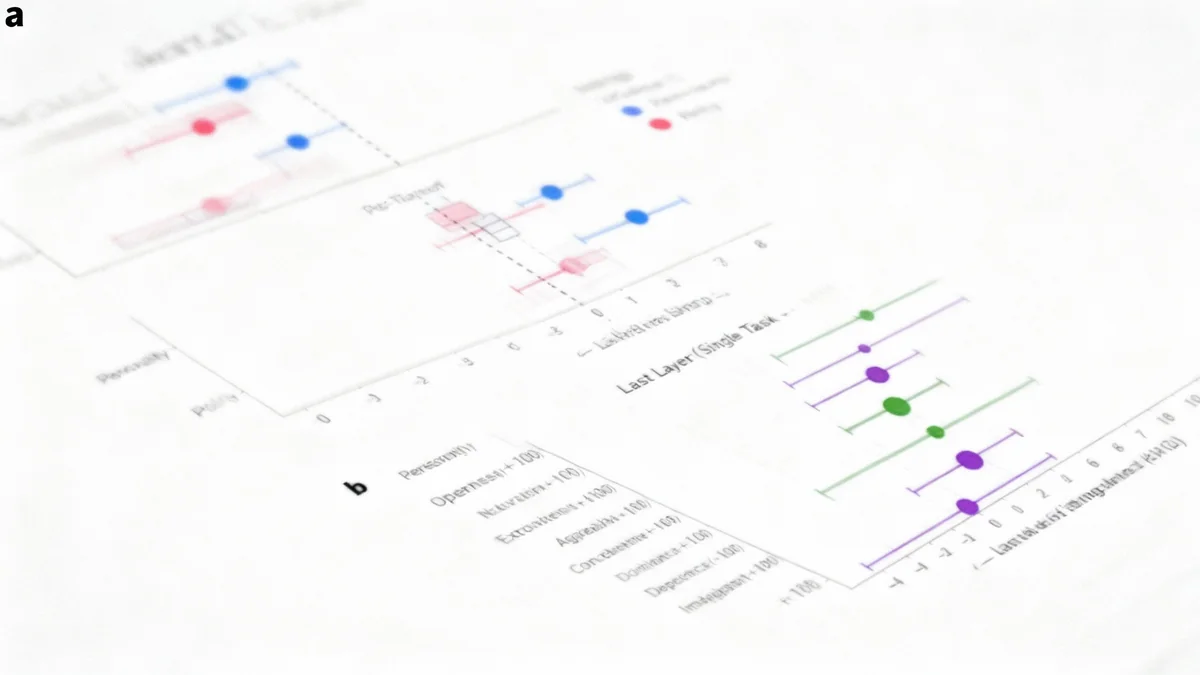

How AI Grades Student Essays

The automated scoring system for the MCAS exams does not have a human read every essay. Instead, the AI is trained on a large set of essays previously scored by human experts, from which it learns the characteristics of essays at each score point. For quality control, human graders give a second read to a sample of about 10% of the AI-scored essays to check for consistency and accuracy. The recent glitch exposes a weakness in this design: significant errors can go undetected in the roughly 90% of essays that no human reviews.
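To make the sampling concern concrete, here is a minimal Python sketch of a random 10% human second-read check. The function names, the one-point tolerance, and the toy data are hypothetical illustrations chosen for this example; they are not DESE's or its vendor's actual procedure.

```python
import random


def select_for_human_review(essay_ids, rate=0.10, seed=None):
    """Hypothetical QC step: randomly pick about `rate` of essays for a human second read."""
    rng = random.Random(seed)
    ids = list(essay_ids)
    k = round(len(ids) * rate)
    return set(rng.sample(ids, k))


def flag_discrepancies(ai_scores, human_scores, tolerance=1):
    """Return essay ids whose AI and human scores differ by more than `tolerance` points.

    Only essays that actually received a human read (keys of `human_scores`) can be flagged.
    """
    return sorted(
        essay_id
        for essay_id, human in human_scores.items()
        if abs(ai_scores[essay_id] - human) > tolerance
    )


if __name__ == "__main__":
    # Toy data (hypothetical): 100 essays that all deserve a 6,
    # but the AI wrongly gives a 0 to essays 0-9.
    true_scores = {i: 6 for i in range(100)}
    ai_scores = {i: (0 if i < 10 else 6) for i in range(100)}

    # Humans re-read only the randomly sampled 10%.
    sampled = select_for_human_review(ai_scores.keys(), rate=0.10, seed=42)
    human_scores = {i: true_scores[i] for i in sampled}

    caught = flag_discrepancies(ai_scores, human_scores)
    missed = [i for i in range(10) if i not in sampled]
    print(f"second reads: {len(sampled)}, errors caught: {len(caught)}, errors missed: {len(missed)}")
```

Because the sample is random, on average it surfaces only about one in ten of the misscored essays; the rest are visible only if a flagged case prompts a wider review, as the teacher's report eventually did.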

Concerns from Parents and Educators

The use of AI to grade student writing has surprised and worried many parents and educators. Shaleen Title, an adjunct professor and a parent in Malden, expressed her astonishment upon learning about the practice. "It was news to me," she stated. "It was astonishing and concerning. I really hope that we get a lot more communication about this as time goes on."

Title and others have raised questions about the fairness and limitations of the technology. Key concerns include:

- Potential for Bias: There are worries that an AI system could inadvertently penalize students with disabilities or those who are learning English as a second language if their writing styles differ from the training data.

- Stifling Creativity: An automated system might struggle to recognize and reward creative or unconventional answers that don't conform to a rigid scoring rubric.

- Lack of Human Nuance: Critics argue that assessing writing, a deeply human and subjective skill, requires a human reader. "An essay is something that should be original and creative," Title added. "I think there should be a human looking at it."

Jim Kang, a Medford parent who audits AI evaluations professionally, echoed these sentiments. He pointed out the apparent contradiction of using AI to grade students who are often explicitly forbidden from using AI in their own work.

"You know, machine learning is good for some things, but it’s really not good for some other things. It’s disrespectful to students because they are told not to use AI when they write and read."

The State's Response and Path Forward

State education officials have acknowledged the issue and are working to prevent a recurrence. Education Commissioner Pedro Martinez described the incident as a "learning experience" and confirmed that DESE is collaborating with its testing vendor to implement better safeguards.

Impact by the Numbers

- 1,400: Approximate number of essays incorrectly scored.

- 192: Number of school districts affected.

- 10%: Portion of AI-scored essays that receive a second read by a human grader.

- 0: Number of student scores that were lowered after the manual rescoring process.

Despite the setback, Martinez indicated that the state does not plan to abandon the technology. Proponents of AI grading argue that it significantly speeds up the scoring process, allowing schools to receive results faster. This quick turnaround can help educators identify students needing intervention and adjust lesson plans more promptly.

However, for parents like Shaleen Title, the stakes are too high for errors. MCAS scores are used to evaluate students, teachers, and entire school districts, influencing everything from academic support to district-level performance benchmarks.

"This really matters. Students, districts and teachers are assessed based on these scores," Title explained. "If it’s not accurate, that really is unfair and affects people in our community."

As the state moves forward, the incident has ignited a critical conversation about the role of artificial intelligence in education and the balance between technological efficiency and the need for human judgment.