A technical issue with an artificial intelligence grading system resulted in approximately 1,400 Massachusetts Comprehensive Assessment System (MCAS) essays receiving incorrect scores. The error, which affected a small fraction of the 750,000 total essays, was identified and corrected after a teacher noticed significant scoring discrepancies.

Key Takeaways

- A technical glitch in an AI system caused about 1,400 MCAS essays to be scored incorrectly.

- The error impacted students across 145 school districts, with an average of one to two students affected per district.

- The problem was discovered by a teacher in Lowell who flagged unusual scores during a review period.

- All affected essays have been rescored, and the issue was resolved by the state's testing contractor.

Technical Issue Affects Statewide Test Results

The Massachusetts Department of Elementary and Secondary Education (DESE) has confirmed that a temporary technical problem with its AI-powered essay grading software led to inaccurate scores. The issue impacted roughly 0.18% of the 750,000 essays submitted for the MCAS exams.

While the number of affected essays is relatively small, the impact was widespread, touching 145 school districts across the state. According to DESE, the error typically resulted in incorrect scores for one or two students in each of the affected districts.

By the Numbers

- Total Essays Graded: 750,000

- Essays Incorrectly Scored: ~1,400

- Districts Affected: 145

- Percentage of Essays Impacted: Approximately 0.18%
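The percentage above follows directly from the two counts. As a quick check, here is a minimal sketch of the arithmetic; the function name `impacted_share` is illustrative and not taken from DESE. Because the count of 1,400 is itself approximate, the result is only an estimate.

```python
def impacted_share(affected: int, total: int) -> float:
    """Return the affected essays as a percentage of all essays."""
    return affected / total * 100


# Figures reported by DESE: about 1,400 affected essays out of 750,000.
share = impacted_share(1_400, 750_000)
print(f"{share:.3f}%")  # a little under 0.19%, in line with the roughly 0.18% reported
```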

A spokesperson for DESE explained that the state has a system in place to catch such problems. "As one way of checking that MCAS scores are accurate, the Department of Elementary and Secondary Education releases preliminary MCAS results to districts and gives them time to report any issues during a discrepancy period each year," the spokesperson stated.

How an Alert Teacher Uncovered the AI Error

The scoring flaw first came to light in mid-July, when a teacher at Reilly Elementary School in Lowell noticed that the preliminary scores for some student essays did not match the quality of the writing.

After being notified by the teacher, district leaders investigated further. Wendy Crocker-Roberge, Assistant Superintendent of Lowell Public Schools, described the severity of the errors they found. Some scores were off by a point or two, but one case stood out dramatically.

"The third essay, which was originally scored a zero out of seven, I re-rated as a six out of seven," Crocker-Roberge explained, highlighting the significant potential impact on an individual student's results.

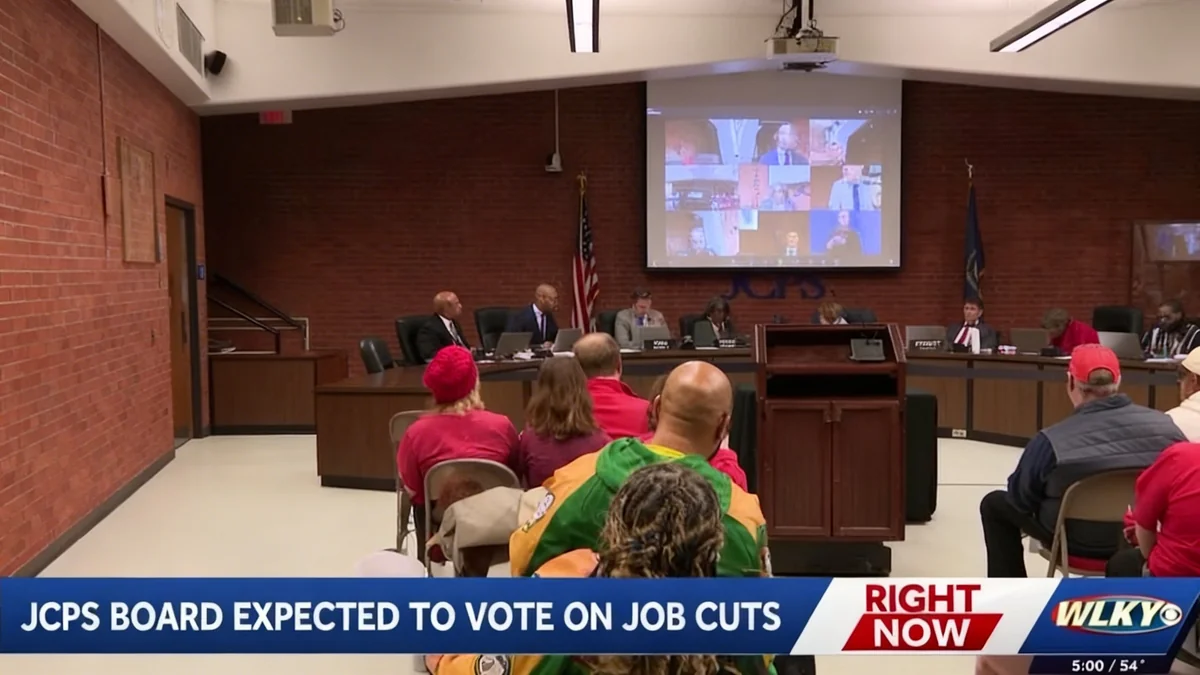

This discovery prompted Lowell Public Schools to report the findings to DESE during the designated discrepancy review period, initiating a statewide investigation into the AI grading system.

Corrective Measures and System Review

Once DESE was made aware of the issue, it immediately engaged its testing contractor, Cognia, to investigate. Cognia's team identified the technical problem and took steps to resolve it. The company re-evaluated all essays that were flagged by the system and conducted a broader review of randomly selected batches of essays to ensure no other errors remained.

The state confirmed that the issue was fully resolved by early August. All of the roughly 1,400 affected essays were rescored by human graders to ensure accuracy before the final results were prepared for public release.

How AI Grades MCAS Essays

The use of AI for scoring MCAS essays is a relatively recent development. The system is trained on a large set of "exemplar essays" previously scored by human experts. The AI learns to identify the characteristics of essays at different score levels. To maintain quality control, 10% of all AI-scored essays are also reviewed by a human grader to check for inconsistencies and ensure the system is performing correctly.
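The 10% human check described above can be pictured as a random audit of AI scores. The sketch below is an illustration only, not a description of Cognia's actual software: the function names, the fixed random seed, and the one-point tolerance are all assumptions made for the example.

```python
import random


def select_for_human_review(essay_ids, fraction=0.10, seed=0):
    """Pick a random sample of essays for a second, human score.

    `fraction` mirrors the 10% figure in the article; `seed` is a
    hypothetical parameter that only makes the sample repeatable.
    """
    ids = list(essay_ids)
    rng = random.Random(seed)
    sample_size = round(len(ids) * fraction)
    return set(rng.sample(ids, sample_size))


def flag_discrepancies(ai_scores, human_scores, tolerance=1):
    """Return IDs of essays whose AI and human scores differ by more than `tolerance`.

    The tolerance of one point is an assumed threshold, not a DESE rule.
    """
    return [
        essay_id
        for essay_id, human_score in human_scores.items()
        if abs(ai_scores[essay_id] - human_score) > tolerance
    ]


# Example: essay "a" mirrors the Lowell case (AI gave 0, a human gave 6).
ai_scores = {"a": 0, "b": 4}
human_scores = {"a": 6, "b": 5}
print(flag_discrepancies(ai_scores, human_scores))  # ['a']
```

An audit like this can only catch errors in essays that land in the sample, which is one reason a small, scattered set of bad scores can go unnoticed until someone outside the process reviews them.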

Future of AI in High-Stakes Testing

This incident has sparked a conversation about the reliability of artificial intelligence in high-stakes educational assessments. Proponents argue that AI can process a large volume of tests quickly, providing faster feedback to schools and educators.

"AI grading certainly has the potential to turn important student performance data around for schools expeditiously, which helps schools to plan for improvement," Crocker-Roberge noted. However, she also expressed caution about the current system's limitations.

The Lowell assistant superintendent suggested that the current model, in which humans review only 10% of AI-graded work, may need to be re-evaluated. "With time, AI will become more accurate at scoring, but it is possible that the 90-10 ratio of AI to human scoring is not yet sufficient to achieve the accuracy rates desired for high-stakes reporting," she said.

As technology continues to evolve, school districts and state education departments will likely continue to refine the balance between automated efficiency and human oversight. Final MCAS score reports are typically made available to students and families in the fall, and DESE has assured the public that the corrected scores will be reflected in those reports.